Researchers train Myo armbands to translate sign language into text

Researchers from Arizona State University have used a pair of Myo armbands, developed by Waterloo, Ontario’s Thalmic Labs, to translate American Sign Language (ASL) into text.

The research team’s project, which they call Sceptre, is a gesture and sign language recognition system that aims to facilitate communication between deaf and hearing people.

The app can display recognized gestures on screen as text, or pass that text to reader software that speaks it aloud.

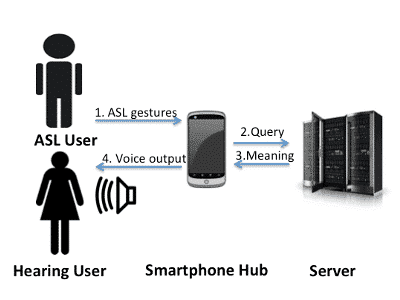

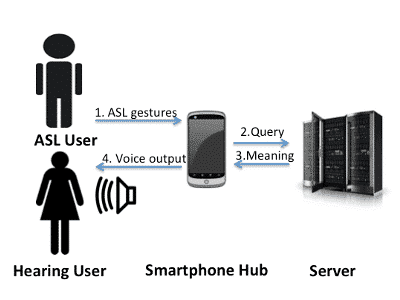

Sceptre uses two Myo armbands, a hub for data collection and a server for processing. As soon as the app detects the end of a gesture, it begins pre-processing the recorded data, which is aggregated into a single data table and stored in a file as an array of time-series data.
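The article does not say how Sceptre decides that a gesture has ended or how the two armbands' streams are combined. The sketch below shows one plausible way to do both, assuming each armband delivers synchronized EMG samples as a NumPy array of shape (time steps, channels), and assuming a gesture ends once muscle activity stays below a threshold for a short run of samples. The function names, the threshold and the run length are all hypothetical and are not taken from the researchers' work.

```python
import numpy as np

# Hypothetical pre-processing sketch; not the Sceptre implementation.
# Assumes both armbands are sampled at the same rate and already aligned in time.

def find_gesture_end(emg, threshold=0.1, quiet_len=20):
    """Return the index at which the arm comes to rest after activity.

    emg: array of shape (T, C). Activity is the mean absolute value across
    channels. The gesture is taken to end at the first sample of a run of
    `quiet_len` consecutive samples below `threshold`, provided some earlier
    sample was active. Returns None if no such point exists.
    """
    activity = np.abs(emg).mean(axis=1)
    active_seen = False
    run = 0
    for t, a in enumerate(activity):
        if a >= threshold:
            active_seen = True  # muscle activity detected; reset the quiet run
            run = 0
        elif active_seen:
            run += 1
            if run == quiet_len:
                return t - quiet_len + 1  # first sample of the quiet run
    return None


def aggregate(left, right, end):
    """Join both armbands' channels column-wise into one table
    (rows = time steps, columns = channels), truncated at the gesture end."""
    return np.hstack([left[:end], right[:end]])
```

Calling `np.save(path, table)` on the aggregated array would then write it to a `.npy` file, which is one simple way to store each gesture as an array of time-series data.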

“The main challenge of this research was to develop a method to store, retrieve and most importantly match gestures effectively, conclusively and in real-time,” write the researchers in a paper to be presented at an intelligent user interface conference in March.
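The quote does not name the matching technique. One standard way to compare two recordings of the same gesture performed at different speeds is dynamic time warping (DTW), which finds the lowest-cost alignment between two time series. The sketch below is a plain DTW distance over multichannel sequences, offered as an illustrative swapped-in technique rather than as the researchers' method; the function name is hypothetical.

```python
import numpy as np

# Illustrative DTW distance; not claimed to be how Sceptre matches gestures.

def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences.

    a: shape (n,) or (n, c); b: shape (m,) or (m, c).
    The local cost is the Euclidean distance between individual frames.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.ndim == 1:
        a = a[:, None]  # treat a 1-D series as a single channel
    if b.ndim == 1:
        b = b[:, None]
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            # extend the cheapest of the three allowed predecessor alignments
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])
```

Because DTW lets one frame align with several frames of the other sequence, `[0, 1, 2]` and `[0, 1, 1, 2]` have a distance of zero. The quadratic cost of this plain version is a known drawback for real-time use; windowed variants are a common way to reduce it.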

The researchers note the non-invasive nature of the armband, and indicate that in future this technology could be developed using smartwatches equipped with EMG sensors working in combination with smartphones.

“Communication and collaboration between deaf people and hearing people is hindered by lack of a common language,” write the researchers. “Although there has been a lot of research in this domain, there is room for work towards a system that is ubiquitous, non-invasive, works in real-time and can be trained interactively by the user. Such a system will be powerful enough to translate gestures performed in real-time, while also being flexible enough to be fully personalized to be used as a platform for gesture based HCI.”

The system is trained using between one and three examples of each of 20 randomly chosen ASL gestures, and it can also be trained on custom gestures that users define themselves.
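Training from one to three examples per gesture suggests a template-based recognizer: store the examples, then label a new recording with the gesture whose stored example is closest. The sketch below illustrates that idea under stated assumptions. It resamples every recording to a fixed length and uses Euclidean distance, a simpler stand-in for whatever matching Sceptre actually uses. The class and method names are hypothetical.

```python
import numpy as np

# Hypothetical nearest-template recognizer; not the Sceptre implementation.

def resample(seq, length=50):
    """Linearly resample a (T,) or (T, C) sequence to shape (length, C)."""
    seq = np.asarray(seq, dtype=float)
    if seq.ndim == 1:
        seq = seq[:, None]
    old = np.linspace(0.0, 1.0, len(seq))
    new = np.linspace(0.0, 1.0, length)
    return np.column_stack([np.interp(new, old, seq[:, c]) for c in range(seq.shape[1])])


class TemplateRecognizer:
    """Stores a few examples per gesture label and classifies by nearest template."""

    def __init__(self, length=50):
        self.length = length
        self.templates = {}  # label -> list of resampled example arrays

    def add_example(self, label, seq):
        # Adding a custom gesture is just recording a new label's examples.
        self.templates.setdefault(label, []).append(resample(seq, self.length))

    def classify(self, seq):
        """Return (label, distance) of the closest stored template."""
        query = resample(seq, self.length)
        best_label, best_dist = None, np.inf
        for label, examples in self.templates.items():
            for ex in examples:
                d = np.linalg.norm(query - ex)
                if d < best_dist:
                    best_label, best_dist = label, d
        return best_label, best_dist
```

With this design, personalizing the system costs nothing beyond recording examples: a new gesture becomes recognizable as soon as one `add_example` call stores it, with no retraining step.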

In addition to the numbers 1 through 10, the team had users sign a variety of words, which the system recognized with 97.72% accuracy.

The YouTube video below shows researcher Prajwal Paudyal demonstrating how the system works.

Terry Dawes

Writer