Editor's Note: This is Part Two of a special feature brought to you courtesy of Industrial Alliance Securities and analyst Blair Abernethy. It will run in multiple parts over the next two weeks in Cantech Letter. For Part One and to read disclaimers, click here.

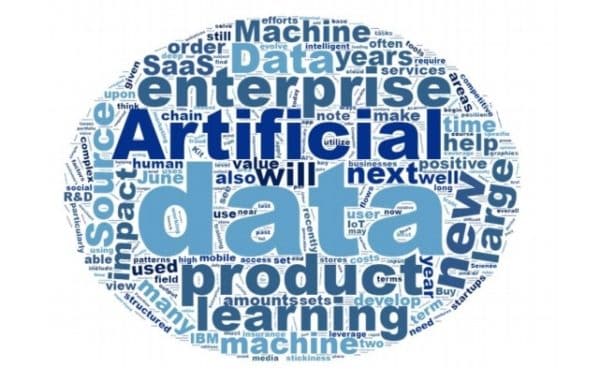

Why is AI (Finally) Happening Now?

AI research has been underway for many decades, although university and technology-industry research funding was largely curtailed for long periods as early promises of the technology failed to materialize or were thought to be disproven. In the early days, AI algorithm development was also severely constrained by a lack of adequate computing power and by limited access to the large data sets needed to train and test AI applications.

In recent years, the combination of several important factors has helped to rejuvenate interest in AI research and attract capital investment in AI venture start-ups and internal enterprise R&D projects. Key factors for AI’s resurgence include:

1. Significant advances in machine learning approaches and new techniques for more rapidly constructing and training complex algorithms;

2. The development of very powerful and inexpensive cloud computing resources, and the use of powerful graphics processing units ("GPUs"), which are now widely recognized as being very well suited to running AI programs. Today, several companies are developing processors specifically designed for running AI algorithms, and, longer term, quantum computing will likely also be used to build AI models. In addition, cluster computing technologies, such as Apache Spark and MapReduce, enable the development of complex machine learning models using very large data sets;

3. The availability of very large structured and unstructured data sets. These data sets have been created by enterprise “big data” projects utilizing technologies such as Hadoop, the growing and widespread adoption of enterprise SaaS platforms, the growth of on-line retailers, massive social media networks, IoT sensor data and the proliferation of mobile technologies.

In addition to the above key factors, an increasingly open approach to enterprise and cloud-based APIs (application programming interfaces) and competition among cloud computing service providers have also helped spur the development of AI.

We note that IBM Watson famously competed on Jeopardy! in February 2011 (the IBM Watson group was later formed in January 2014), an event that helped to significantly raise the public profile of recent advances in AI technology. Watson is IBM’s analytical computing system (see Exhibit 9) that is now being used in many different applications across multiple vertical industries.

Finally, in the last two years, universities, incubators, leading software companies, and venture capitalists have all significantly increased their funding of advanced data science research and new AI-related ventures, as evidenced by the proliferation of North American AI technology start-ups shown in Exhibits 10 and 11. Beyond the rapid growth in traditional venture capital investment, US corporate venture capital investment in AI is up 7x since 2013, reaching $1.77B in 2016 (126 deals) and $1.8B in 1H17 (88 deals), according to CB Insights. As in previous technology cycles, we anticipate that many of these start-ups will either fail or be absorbed by mainstream (cash-flow-positive) enterprise software companies and other industry leaders.

Should AI be Considered a General Purpose Technology?

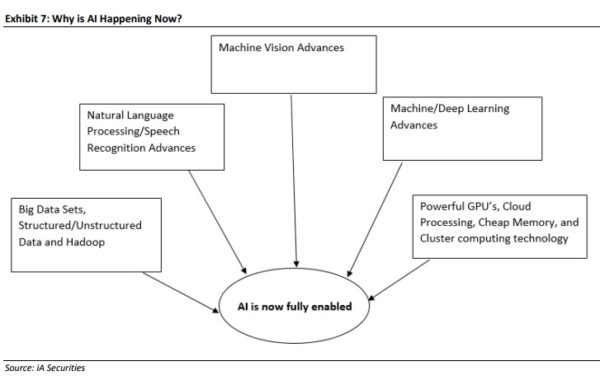

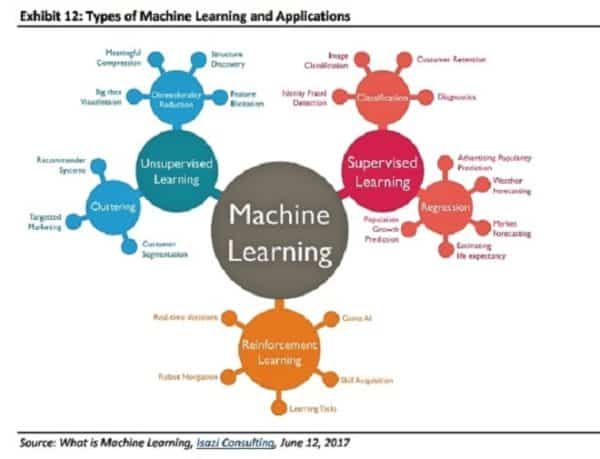

As discussed earlier, the field of AI comprises several different sub-disciplines, all of which continue to develop and evolve rapidly. We believe that AI should be considered a "general purpose" technology. That is, AI is a very broadly applicable set of technologies that can be used to automate, augment, and enhance countless organizational workflows, processes, decisions, and software applications. In some respects, we believe that AI can also be viewed as a foundational software tool, as versatile as a spreadsheet or an engineering Computer-Aided Design ("CAD") tool.

AI solutions can be deployed either to replace repetitive human tasks outright, for both skilled and unskilled workers, or to augment the skills of workers involved in complex operations. In general, AI currently works well when automating repetitive, high-volume, low-value-added tasks, such as handling straightforward consumer product and service inquiries to call centres, or requests to internal IT help desks and HR departments.

To be effective, AI solutions in use today typically require significant amounts of input or training data, such as historical business transactions, images (e.g., medical), or consumer retail purchasing data. With large amounts of data, AI algorithms can be rapidly trained to perform analysis, predict outcomes, provide recommendations, or make decisions. Importantly, available data sources (aided by advances in distributed database technologies) are growing rapidly as connected mobile devices, new IoT solutions, and cloud-based applications (social, SaaS, etc.) continue to proliferate. In the future, as AI tools and methods improve, AI will likely require less data to be effective, and several AI technologies will be combined to solve higher-level, more complex process problems.

How is AI Being Applied Today?

AI application development is currently taking place in a variety of settings, including AI start-ups (building AI-enabled solutions for vertical and horizontal markets), university-sponsored AI incubators, custom projects within corporate IT departments, and throughout both the traditional enterprise software industry and the consumer-focused software industry. New applications of AI technology are touted in the media almost daily; we note just a few examples here (also see Exhibit 13):

• Improved accuracy in credit card fraud detection;

• Investment portfolio management recommendations;

• Disease diagnosis based on medical imaging;

• Self-driving vehicles;

• Facial recognition for security uses;

• Automated image labelling and classification;

• Call centre automation and support;

• Voice- and text-based language translation;

• Product recommendations for on-line shoppers;

• Supply chain and manufacturing planning optimization;

• Predicting industrial equipment failure; and

• Monitoring large-volume data flows from IoT networks.

Stay tuned for Part Three in this series: "How Might AI Impact the Incumbent Enterprise Software Industry?"
