Montreal computational imaging company Algolux has been working on a problem for the past two years that is likely to have an impact on the next generation of consumer electronics, as well as on image sensors that are increasingly being deployed throughout the nascent Internet of Things infrastructure.

The field of computational imaging, or the mathematical processing of images by algorithms, has really only existed for the past 10 to 15 years and has so far been largely confined to academic research departments.

But as computational power has become more accessible, and as cameras have become embedded in phones and other portable electronics, some of those researchers have started to migrate out of universities and into the world of consumer devices and sensor-dependent technologies.

Incorporated in April 2014, Algolux has its origins in two patent portfolios containing IP developed by teams working separately at the University of British Columbia and Columbia University in New York, which were then brought together under the umbrella of what the company refers to as “deconvolution”, or image deblurring.

Cantech Letter dropped by the Algolux office, located in the giant old Northern Electric Company building, now referred to as Le Nordelec and home to dozens of small businesses, to chat with company CEO Allan Benchetrit on the origins of the company’s technology and its future applications.

“Initially, it was really a deblurring story,” Benchetrit tells me. “That was the technology that we had. And even though it was still in very raw form, that was what we were demonstrating at the time. The use-case was basically a BlackBerry device taking an image, and because it didn’t have a great camera on it, we were able to show, through processing on a laptop, that we could actually deblur that image.”

Deblurring an image? You might think of that as a problem that has already been solved, but to think of Algolux’s technology as merely a form of image sharpening is to miss its potential market value.

“For us, what we realized is that for the software to fulfill its biggest potential, we would have to go after larger economic problems than simply quality aesthetics for consumers, because that wasn’t going to fly,” he says.

The Algolux team began working with TandemLaunch, a Montreal incubator specializing in bleeding-edge, deep technology with an emphasis on computer vision.

TandemLaunch doesn’t work like most incubators, trying to attract entrepreneurs eager to develop their SaaS ideas or apps.

TandemLaunch instead sends scouts to research-based university departments to discover promising technology, then offers to take an option on commercializing it and assembles a team around each one.

Shortly afterwards, the Algolux team found itself at the 2014 Consumer Electronics Show in Las Vegas, where it attracted attention from a few leading smartphone vendors and consumer electronics companies.

Then, in April 2014, Algolux was incorporated. With support from TandemLaunch and through Benchetrit’s efforts, the company managed to raise a little more than $2.5 million in seed investment, led by Real Ventures alongside a handful of angel investors.

“During the course of raising financing, we realized that we were pitching an algorithm, not really a company,” says Benchetrit. “We really wanted to have our own identity beyond the IP that we had brought in. So we started to build a much bigger vision for the company, around an image processing platform that would create somewhat of a paradigm shift in the way that images have traditionally been processed.”

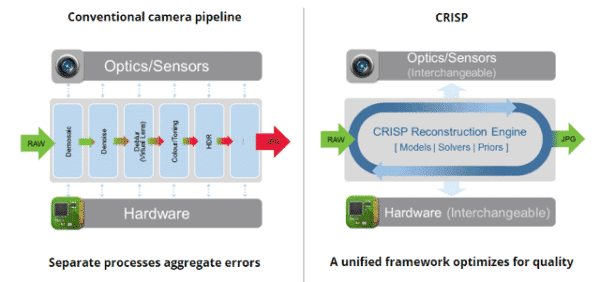

The way smartphones work today, image stabilization technology is largely hardware-based.

Image blur can come from a variety of sources, including very subtle camera shake from a hand-held device, a subject who can’t keep still, low light, poor lens alignment and any number of other factors.

Algolux, rather than applying a mechanical form of image stabilization, proposed to do it with software, betting that if you can model the shake, or model any other type of image aberration, then you can remove the aberration using an algorithm.
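To make the idea of “modelling the shake” concrete, here is a minimal sketch of classic non-blind deconvolution in NumPy. It assumes the shake has already been estimated as a known blur kernel (a point spread function) and removes it with a textbook Wiener filter. This is not Algolux’s method; the function names, the horizontal motion kernel and the noise-to-signal constant `nsr` are illustrative assumptions.

```python
import numpy as np

def blur(image, kernel):
    """Simulate camera shake: circularly convolve the image with the kernel via the FFT."""
    K = np.fft.fft2(kernel, s=image.shape)  # kernel zero-padded to image size
    return np.real(np.fft.ifft2(np.fft.fft2(image) * K))

def wiener_deblur(blurred, kernel, nsr=1e-3):
    """Estimate the sharp image from a blurred one, given a known blur kernel.

    nsr is an assumed noise-to-signal ratio: larger values suppress noise
    amplification at the cost of sharpness.
    """
    K = np.fft.fft2(kernel, s=blurred.shape)
    # Wiener filter in the frequency domain: conj(K) / (|K|^2 + nsr)
    G = np.conj(K) / (np.abs(K) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(blurred) * G))

def motion_kernel(length=9):
    """Hypothetical horizontal shake: a 1 x length box kernel that sums to 1."""
    return np.ones((1, length)) / length

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.random((64, 64))
    k = motion_kernel(9)
    blurred = blur(sharp, k)
    restored = wiener_deblur(blurred, k, nsr=1e-6)
    # Mean absolute error before and after deblurring
    print(np.abs(blurred - sharp).mean(), np.abs(restored - sharp).mean())
```

Because this toy example adds no sensor noise and uses the exact kernel, the restored image is almost identical to the original. Real photos are noisier and the blur is unknown, which is why `nsr` matters and why practical systems also rely on models of what natural images look like.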

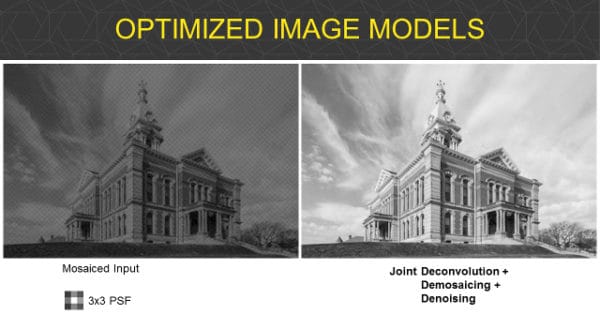

Using what Benchetrit refers to as an “algorithmic framework” during image capture, the software applies several mathematical models along with “priors”, built-in knowledge that lets the framework understand the difference between a static object and organic material. It is almost like Plato’s theory of forms: underneath every table or tree, there’s a model for a table or a tree.

Out of the eight million or so pixels in a smartphone image, the software then looks for those whose colour, judged against their surrounding pixels, appears to have been wrongly assigned, and gives them a corrected value.
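A much simpler relative of that idea can be sketched in a few lines. The version below assumes that a “wrongly assigned” pixel is one that differs sharply from the median of its eight neighbours, and replaces it with that median. It is a basic median-based outlier fix on a single colour channel, not Algolux’s priors-based framework; `fix_outlier_pixels` and its `threshold` parameter are hypothetical.

```python
import numpy as np

def fix_outlier_pixels(img, threshold=0.25):
    """Replace pixels that disagree strongly with their neighbours.

    img: 2-D float array (one colour channel), values in [0, 1].
    threshold: hypothetical tolerance; a pixel further than this from the
    median of its 8 neighbours is treated as wrongly assigned.
    Returns the corrected image and a boolean mask of replaced pixels.
    """
    padded = np.pad(img, 1, mode="edge")  # repeat border pixels at the edges
    h, w = img.shape
    # Shifted views giving each pixel's 8 neighbours (the pixel itself excluded)
    neighbours = [
        padded[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
        if (dy, dx) != (0, 0)
    ]
    median = np.median(np.stack(neighbours), axis=0)
    bad = np.abs(img - median) > threshold
    fixed = img.copy()
    fixed[bad] = median[bad]
    return fixed, bad

if __name__ == "__main__":
    img = np.full((5, 5), 0.5)
    img[2, 2] = 1.0  # a single "hot" pixel
    fixed, bad = fix_outlier_pixels(img)
    print(bad.sum(), fixed[2, 2])  # 1 0.5
```

The median is used rather than the mean so that a single bad pixel cannot drag its neighbours’ reference value toward itself.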

The analogy that occurred to me as I was walking away from the Algolux offices was of the human brain, and its capacity to create a seamless vision that helps us make sense of the world from the raw imagery that would otherwise look meaningless if gathered through the two gelatinous eyeballs embedded in our faces and then processed by a run-of-the-mill sensor.

That kind of instant, on-the-fly processing of images is something that our brain does all the time, without our noticing it.

But bringing the same kind of image processing to the technologies we increasingly rely on to make sense of the world for us is still very much a work in progress.

Benchetrit and his team came up with the acronym CRISP, for Computationally Reconfigurable Image Signal Platform, which ties in nicely with the idea of delivering “crisp” images but is also a nod to the traditional camera term ISP, which stands for Image Signal Processor.

“What we’ve done with CRISP is kind of reinvented how the image processing works by actually building an algorithm,” he says. “It basically took a team of seven or eight PhDs close to two years, I guess. What we’ve been doing from a commercialization standpoint is, rather than taking this ISP and trying to license that to market, which would be a very complex task for a company of our size, we’re essentially using the framework to solve very relevant, very well-known problems in image processing.”

And while CRISP’s most obvious application may be improving image quality on consumer smartphones, it’s the company’s willingness to solve other types of computer imaging problems that hints at its larger potential.

Cars, for example, are now being built with computer vision cameras that surround the automobile, for blind spot detection or lane changes.

The need for computer vision that exists not to help a driver but to help the car itself make decisions based on pattern detection will only increase as self-driving cars gradually become the norm.

But developing that technology also raises physical problems, in a context that will be familiar to Canadians, that Algolux’s algorithm is in a unique position to solve.

“When these cameras, that are on the outside of the vehicle, hit extreme temperatures, really cold or really hot, the lenses will actually either contract or expand,” says Benchetrit. “And the minute that happens, if you have a micron’s worth of separation between the lenses, you have a problem, because that camera will no longer be able to consistently and accurately recognize the pattern that it was meant to recognize, which renders it useless and, obviously, dangerous in a real-life situation.”

Instead of relying on a mechanical fix for cameras that can’t collect useful information in extreme temperatures, Algolux’s software can correct the resulting aberrations and distortions in real time.

Algolux approaches companies that are likely to be experiencing these very real-world image processing problems and offers to start a testing cycle with them and develop a prototype that solves the problem, through which commercial or joint development agreements can be put in place.

“There’s a reason that we’re not dealing directly with consumers, like building a consumer-facing app,” says Benchetrit. “Most people are more than happy with the images they’re taking, and probably would not pay more for additional quality. That’s why what we’re doing is addressing specific problems that the manufacturers of the devices, or cameras, are suffering from.”

So Algolux focuses on use-cases rather than on a particular vertical, solving problems that might be as relevant to the automotive industry as they are to smartphone makers or sensor manufacturers.

Another vertical Algolux is beginning to work in is RGB-IR sensors, common in virtual and augmented reality headsets. RGB refers to the red, green and blue light visible to the human eye, and IR to infrared.

“We’re currently working with some of the leading vendors in that space, to basically see if we can help move that R&D along,” says Benchetrit.

In existing augmented reality headsets, RGB and IR capture are handled by two separate sensors, which take up quite a bit of real estate and make the headset form factor fairly bulky.

Algolux has found that its framework is well-suited to addressing the problems inherent in collapsing those two sensors into one.

While the prospect of improved virtual reality headset technology is certainly well-publicized when it comes to video games, the market becomes much larger and more exciting from a commercial standpoint when you consider all of the applications for headsets used in a variety of industrial contexts, like manufacturing or warehouse inventory.

“The way that we’re attacking it is by working with the sensor manufacturers themselves,” says Benchetrit, “because in theory they’re hearing from their largest customers what the pain points are, and if we can help them solve that, then that will just make them more competitive.”

Those examples of using software to enable sensors to function in difficult physical circumstances, or to make augmented reality technology easier to use, fall into the broad category of Internet of Things applications that improve people’s lives without them even knowing that a problem is being solved, or that there was ever a problem to begin with.
